Overview

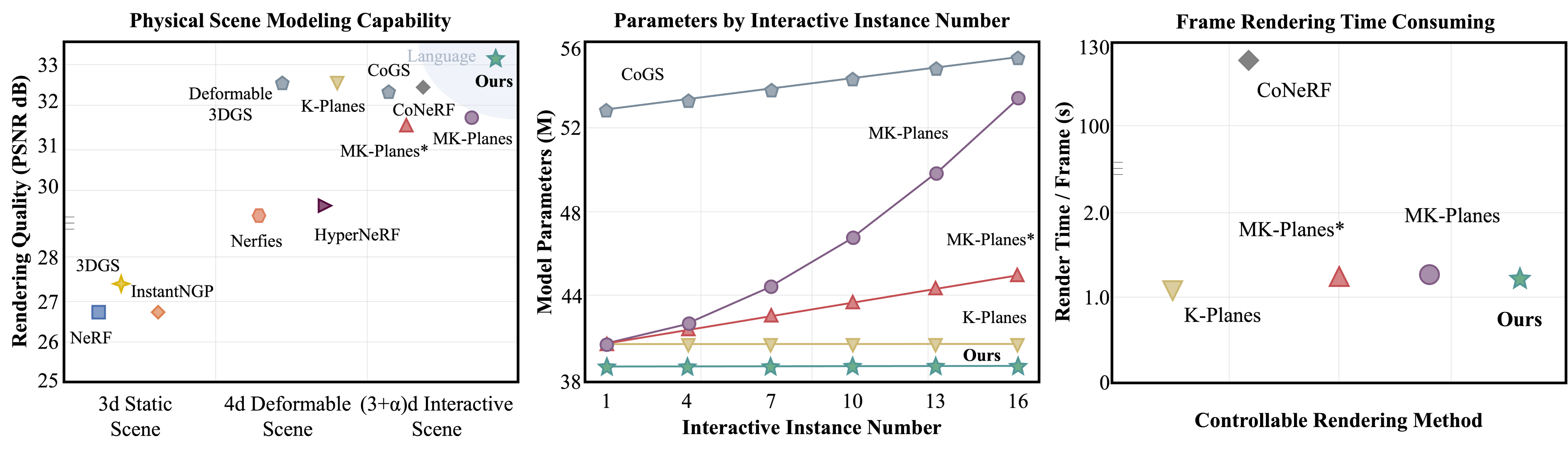

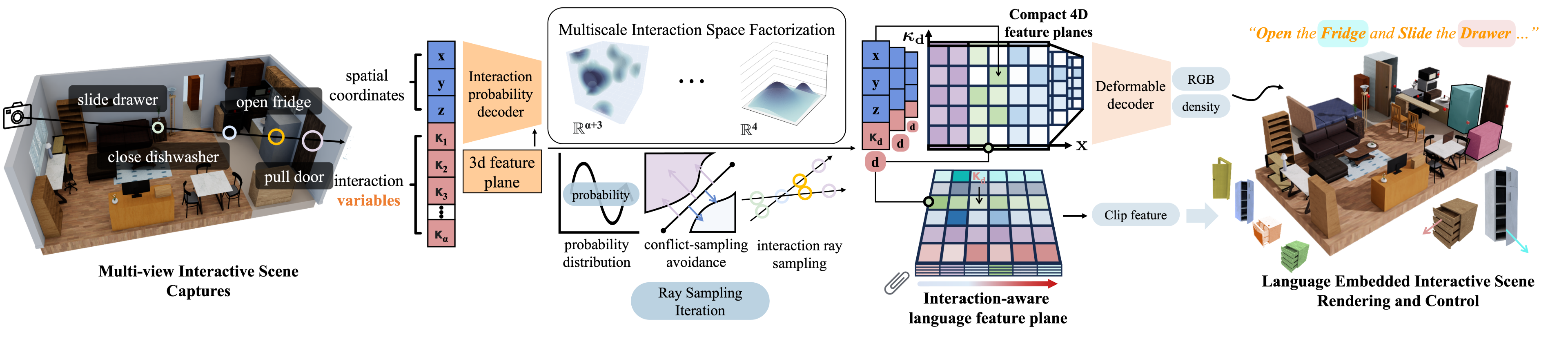

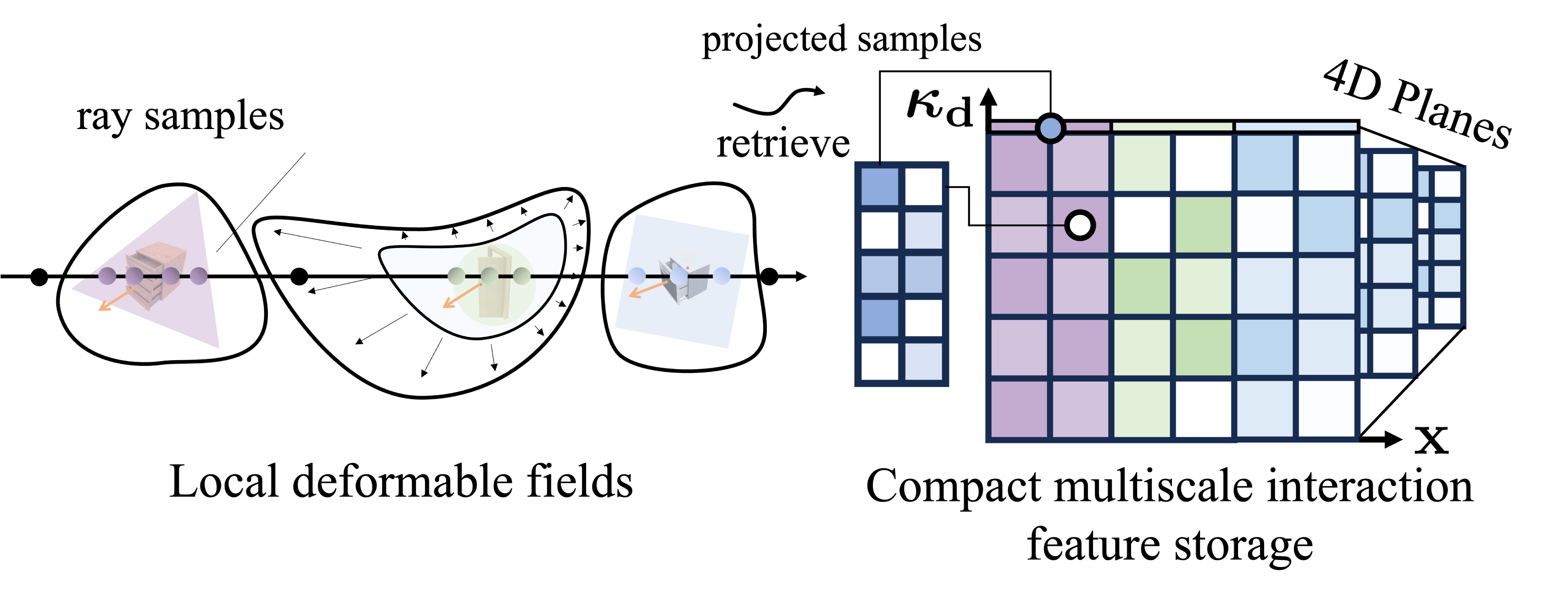

LiveScene advances interactive scene reconstruction in the physical world by extending interactive object reconstruction from the single-object level to the complex-scene level. To accurately model the interactive motions of multiple objects in a complex scene, LiveScene proposes an efficient factorization that decomposes the interactive scene into multiple local deformable fields, each of which reconstructs one interactive object separately. This achieves the first accurate and independent control of multiple interactive objects in a complex scene. LiveScene also introduces an interaction-aware language embedding method that generates varying language embeddings to localize individual interactive objects under different interactive states, enabling control of interactive objects through natural language.

Contributions:

- The first scene-level language-embedded interactive radiance field, which efficiently reconstructs and controls complex physical scenes and supports control of multiple interactive objects within the neural scene under diverse interaction variations and language instructions.

- An efficient space factorization and sampling technique that decomposes an interactive scene into local deformable fields and samples interaction-relevant 3D points to control individual interactive objects in a complex scene, together with an interaction-aware language embedding method that generates state-dependent language embeddings to localize and control interactive objects.

- The first scene-level physical interaction datasets, OmniSim and InterReal, containing 28 subsets and 70 interactive objects. Extensive experiments demonstrate state-of-the-art performance in novel view synthesis, video frame interpolation, and interactive scene control.
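
The factorization idea in the list above can be illustrated with a toy sketch. This is not the LiveScene implementation: the `LocalField` class, the axis-aligned boxes, the linear displacement, and the object names are all hypothetical stand-ins chosen for illustration, whereas the actual method learns its fields from data. The sketch only shows the control flow: each interactive object owns a local region with its own interaction state, and a sampled 3D point is deformed only if it falls inside one of those regions.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class LocalField:
    """Hypothetical stand-in for one local deformable field (one interactive object)."""
    name: str
    box_min: Point    # lower corner of the object's local region
    box_max: Point    # upper corner of the object's local region
    direction: Point  # displacement applied when the interaction state is 1.0

    def contains(self, p: Point) -> bool:
        # A point is interaction-relevant for this object if it lies inside the box.
        return all(lo <= x <= hi for lo, x, hi in zip(self.box_min, p, self.box_max))

    def deform(self, p: Point, state: float) -> Point:
        # Toy deformation: translate the point in proportion to the state.
        return tuple(x + state * d for x, d in zip(p, self.direction))


def find_field(p: Point, fields: Sequence[LocalField]) -> Optional[LocalField]:
    """Return the first local field whose region contains p, or None for background."""
    for field in fields:
        if field.contains(p):
            return field
    return None


def warp_point(p: Point, fields: Sequence[LocalField], states: Dict[str, float]) -> Point:
    """Deform p with the state of the object that owns it; leave background points alone."""
    field = find_field(p, fields)
    if field is None:
        return p
    return field.deform(p, states[field.name])


# Two hypothetical objects, each controlled by its own scalar state.
fields = [
    LocalField("drawer", (0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (0.5, 0.0, 0.0)),
    LocalField("door", (2.0, 0.0, 0.0), (3.0, 2.0, 1.0), (0.0, 0.0, 0.8)),
]
states = {"drawer": 1.0, "door": 0.0}

print(warp_point((0.5, 0.5, 0.5), fields, states))  # inside the drawer region: moved
print(warp_point((2.5, 1.0, 0.5), fields, states))  # inside the door region, state 0: unchanged
print(warp_point((5.0, 5.0, 5.0), fields, states))  # background: unchanged
```

Because each object reads only its own entry in `states`, changing the drawer state never affects points owned by the door, which is the independence property the factorization is meant to provide.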

Pipeline

Interactive Dataset

More Demos


Acknowledgement

We adapted code from several excellent repositories, including Nerfstudio, OmniGibson, K-Planes, LERF, and CoNeRF. Thanks for making the code available! 🤗

Citation

If you use this work or find it helpful, please consider citing:

@misc{livescene2024,
  title={LiveScene: Language Embedding Interactive Radiance Fields for Physical Scene Rendering and Control},
  author={Anonymized Author},
  year={2024},
  eprint={2406.16038},
  archivePrefix={arXiv},
}